Understanding TCO on Databricks

Understanding the value of your AI and data investments is crucial, yet over 52% of enterprises fail to measure Return on Investment (ROI) rigorously [Futurum]. Complete ROI visibility requires connecting platform usage and cloud infrastructure into a clear financial picture. Often, the data is available but fragmented, as today's data platforms must support a growing range of storage and compute architectures.

On Databricks, customers are managing multicloud, multi-workload and multi-team environments. In these environments, having a consistent, comprehensive view of cost is essential for making informed decisions.

At the core of cost visibility on platforms like Databricks is the concept of Total Cost of Ownership (TCO).

On multicloud data platforms, like Databricks, TCO consists of two core components:

- Platform costs, such as compute and managed storage, are costs incurred through direct usage of Databricks products.

- Cloud infrastructure costs, such as virtual machines, storage, and networking charges, are costs incurred through the underlying usage of cloud services needed to support Databricks.

Understanding TCO is simplified when using serverless products. Because compute is managed by Databricks, the cloud infrastructure costs are bundled into the Databricks costs, giving you centralized cost visibility directly in Databricks system tables (though storage costs will still be with the cloud provider).

Understanding TCO for classic compute products, however, is more complex. Here, customers manage compute directly with the cloud provider, meaning both Databricks platform costs and cloud infrastructure costs need to be reconciled. In these cases, there are two distinct data sources to be resolved:

- System tables (AWS | AZURE | GCP) in Databricks provide operational workload-level metadata and Databricks usage.

- Cost reports from the cloud provider detail costs on cloud infrastructure, including discounts.

Together, these sources form the full TCO view. As your environment grows across many clusters, jobs, and cloud accounts, understanding these datasets becomes a critical part of cost observability and financial governance.
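To make the two-source view concrete, here is a minimal Python sketch that sums platform and cloud infrastructure costs per cluster. The row shapes, column names (`cluster_id`, `cost_usd`, `charge_type`) and figures are hypothetical and far simpler than real system tables or cloud cost reports.

```python
from collections import defaultdict

# Hypothetical, simplified rows. Real system tables and cloud cost reports
# have many more columns and different names.
platform_costs = [
    {"cluster_id": "c-1", "cost_usd": 40.0},
    {"cluster_id": "c-2", "cost_usd": 10.0},
]
cloud_infra_costs = [
    {"cluster_id": "c-1", "charge_type": "vm", "cost_usd": 55.0},
    {"cluster_id": "c-1", "charge_type": "storage", "cost_usd": 5.0},
    {"cluster_id": "c-2", "charge_type": "vm", "cost_usd": 12.5},
]


def total_cost_of_ownership(platform_rows, infra_rows):
    """Return {cluster_id: {"platform": x, "cloud_infra": y, "tco": x + y}}."""
    totals = defaultdict(lambda: {"platform": 0.0, "cloud_infra": 0.0})
    for row in platform_rows:
        totals[row["cluster_id"]]["platform"] += row["cost_usd"]
    for row in infra_rows:
        totals[row["cluster_id"]]["cloud_infra"] += row["cost_usd"]
    # TCO is the sum of both components for each cluster.
    return {
        cluster_id: {**parts, "tco": parts["platform"] + parts["cloud_infra"]}
        for cluster_id, parts in totals.items()
    }


tco_by_cluster = total_cost_of_ownership(platform_costs, cloud_infra_costs)
```

In practice the hard part is not the addition but producing two inputs that share a reliable key, which is what the following sections discuss.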

The Complexity of TCO

The complexity of measuring your Databricks TCO is compounded by the disparate ways cloud providers expose and report cost data. Understanding how to join these datasets with system tables to produce accurate cost KPIs requires deep knowledge of cloud billing mechanics, knowledge many Databricks-focused platform admins may not have. Here, we deep dive on measuring your TCO for Azure Databricks and Databricks on AWS.

Azure Databricks: Leveraging First-Party Billing Data

Because Azure Databricks is a first-party service within the Microsoft Azure ecosystem, Databricks-related charges appear directly in Azure Cost Management alongside other Azure services, even including Databricks-specific tags. Databricks costs appear in the Azure Cost analysis UI and as Cost Management data.

However, Azure Cost Management data does not contain the deeper workload-level metadata and performance metrics found in Databricks system tables. Thus, many organizations seek to bring Azure billing exports into Databricks.

Yet fully joining these two data sources is time-consuming and requires deep domain knowledge, an effort that most customers simply don't have time to define, maintain and replicate. Several challenges contribute to this:

- Infrastructure must be set up for automated cost exports to ADLS, which can then be referenced and queried directly in Databricks.

- Azure cost data is aggregated and refreshed daily, unlike system tables, which are on the order of hours; data must be carefully deduplicated and timestamps matched.

- Joining the two sources requires parsing high-cardinality Azure tag data and identifying the right join key (e.g., ClusterId).
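The tag-parsing challenge above can be sketched in Python as follows. This is illustrative only: the row shape, the `tags` column name and the sample values are assumptions, and the sketch tolerates a tags string with or without enclosing braces, which is itself an assumption about the export format rather than a documented guarantee.

```python
import json
from collections import defaultdict


def extract_cluster_id(tags_field):
    """Return the ClusterId tag value, or None if absent or unparseable."""
    if not tags_field:
        return None
    text = tags_field.strip()
    # Assumption: some exports omit the enclosing braces around the tag pairs.
    if not text.startswith("{"):
        text = "{" + text + "}"
    try:
        tags = json.loads(text)
    except json.JSONDecodeError:
        return None
    if not isinstance(tags, dict):
        return None
    return tags.get("ClusterId")


# Hypothetical export rows; real exports carry many more columns.
azure_cost_rows = [
    {"date": "2025-01-01", "cost": 12.0,
     "tags": '{"ClusterId": "0101-abc", "Vendor": "Databricks"}'},
    {"date": "2025-01-01", "cost": 3.0,
     "tags": '"ClusterId": "0101-abc","Vendor": "Databricks"'},
    {"date": "2025-01-01", "cost": 7.5, "tags": '{"Vendor": "Databricks"}'},
    {"date": "2025-01-01", "cost": 1.0, "tags": ""},
]

# Aggregate daily cost per cluster; rows without a ClusterId tag are skipped.
daily_cost_by_cluster = defaultdict(float)
for row in azure_cost_rows:
    cluster_id = extract_cluster_id(row["tags"])
    if cluster_id is not None:
        daily_cost_by_cluster[(row["date"], cluster_id)] += row["cost"]
```

The resulting `(date, cluster_id)` key is one plausible grain for joining against daily aggregates of system table usage; rows without a cluster tag would need separate handling.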

Databricks on AWS: Aligning Marketplace and Infrastructure Costs

On AWS, while Databricks costs do appear in the Cost and Usage Report (CUR) and in AWS Cost Explorer, costs are represented at a more aggregated SKU level, unlike Azure. Moreover, Databricks costs appear in CUR only when Databricks is purchased through the AWS Marketplace; otherwise, CUR will reflect only AWS infrastructure costs.

In this case, understanding how to co-analyze AWS CUR alongside system tables is even more critical for customers with AWS environments. This allows teams to analyze infrastructure spend, DBU usage and discounts together with cluster- and workload-level context, creating a more complete TCO view across AWS accounts and regions.

Yet joining AWS CUR with system tables can also be challenging. Common pain points include:

- Infrastructure must support recurring CUR reprocessing, since AWS refreshes and replaces cost data multiple times per day (with no primary key) for the current month and any prior billing period with changes.

- AWS cost data spans multiple line item types and cost fields, requiring care to select the correct effective cost per usage type (On-Demand, Savings Plan, Reserved Instances) before aggregation.

- Joining CUR with Databricks metadata requires careful attribution, as cardinality can differ, e.g., shared all-purpose clusters are represented as a single AWS usage row but can map to multiple jobs in system tables.
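As an illustration of the effective-cost point above, the following Python sketch picks one cost field per line item type before summing. The mapping is one common amortized-cost convention, not necessarily the logic used by any particular pipeline, and the column names follow CUR-style naming as an assumption that should be checked against your export's schema. The sample rows are made up.

```python
def effective_cost(row):
    """Pick a single cost value for a CUR-style row based on its line item type."""
    item_type = row["line_item_line_item_type"]
    if item_type == "SavingsPlanCoveredUsage":
        # Usage covered by a Savings Plan: use the plan's effective cost.
        return row["savings_plan_savings_plan_effective_cost"]
    if item_type == "DiscountedUsage":
        # Usage covered by a Reserved Instance: use the reservation's effective cost.
        return row["reservation_effective_cost"]
    if item_type == "Usage":
        # On-Demand usage: the unblended cost is the effective cost.
        return row["line_item_unblended_cost"]
    # Fees, negations, tax and other types are excluded in this sketch.
    return 0.0


# Hypothetical rows with only the columns this sketch needs.
cur_rows = [
    {"line_item_line_item_type": "Usage",
     "line_item_unblended_cost": 1.0},
    {"line_item_line_item_type": "SavingsPlanCoveredUsage",
     "line_item_unblended_cost": 2.0,
     "savings_plan_savings_plan_effective_cost": 0.5},
    {"line_item_line_item_type": "DiscountedUsage",
     "line_item_unblended_cost": 0.0,
     "reservation_effective_cost": 0.25},
    {"line_item_line_item_type": "SavingsPlanNegation",
     "line_item_unblended_cost": -2.0},
]

total_effective_cost = sum(effective_cost(row) for row in cur_rows)
```

Summing a single raw cost column across all line item types would count the Savings Plan row at its On-Demand rate and then cancel it with the negation row, which is why the selection happens per row before aggregation.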

Simplifying Databricks TCO calculations

In production-scale Databricks environments, cost questions quickly move beyond overall spend. Teams want to understand cost in context: how infrastructure and platform usage connect to real workloads and decisions. Common questions include:

- How does the total cost of a serverless job benchmark against a classic job?

- Which clusters, jobs, and warehouses are the biggest consumers of cloud-managed VMs?

- How do cost trends change as workloads scale, shift, or consolidate?

Answering these questions requires bringing together financial data from cloud providers with operational metadata from Databricks. Yet as described above, teams need to maintain bespoke pipelines and a detailed knowledge base of cloud and Databricks billing to accomplish this.

To support this need, Databricks is introducing the Cloud Infra Cost Field Solution, an open source solution that automates ingestion and unified analysis of cloud infrastructure and Databricks usage data, inside the Databricks Platform.

By providing a unified foundation for TCO analysis across Databricks serverless and classic compute environments, the Field Solution helps organizations gain clearer cost visibility and understand architectural trade-offs. Engineering teams can track cloud spend and discounts, while finance teams can identify the business context and ownership of top cost drivers.

In the next section, we'll walk through how the solution works and how to get started.

Technical Solution Breakdown

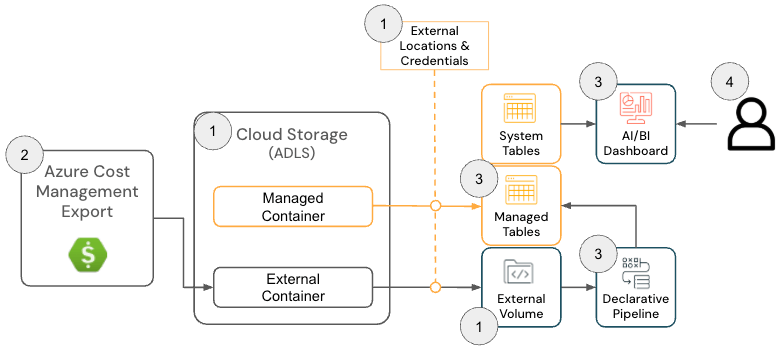

Although the components may have different names, the Cloud Infra Cost Field Solutions for Azure and AWS customers share the same principles and can be broken down into the following components:

Both the AWS and Azure Field Solutions are excellent for organizations that operate within a single cloud, but they can also be combined for multicloud Databricks customers using Delta Sharing.

Azure Databricks Field Solution

The Cloud Infra Cost Field Solution for Azure Databricks consists of the following architecture components:

Azure Databricks Solution Architecture

To deploy this solution, admins must have the following permissions across Azure and Databricks:

- Azure

- Permissions to create an Azure Cost Export

- Permissions to create the following resources within a Resource Group:

- Databricks

- Permission to create the following resources:

- Storage Credential

- External Location

- Permission to create the following resources:

The GitHub repository provides more detailed setup instructions; however, at a high level, the solution for Azure Databricks has the following steps:

- [Terraform] Deploy Terraform to configure dependent components, including a Storage Account, External Location and Volume

- The purpose of this step is to configure a location where the Azure billing data is exported so it can be read by Databricks. This step is optional if there is a preexisting Volume, since the Azure Cost Management Export location can be configured in the next step.

-

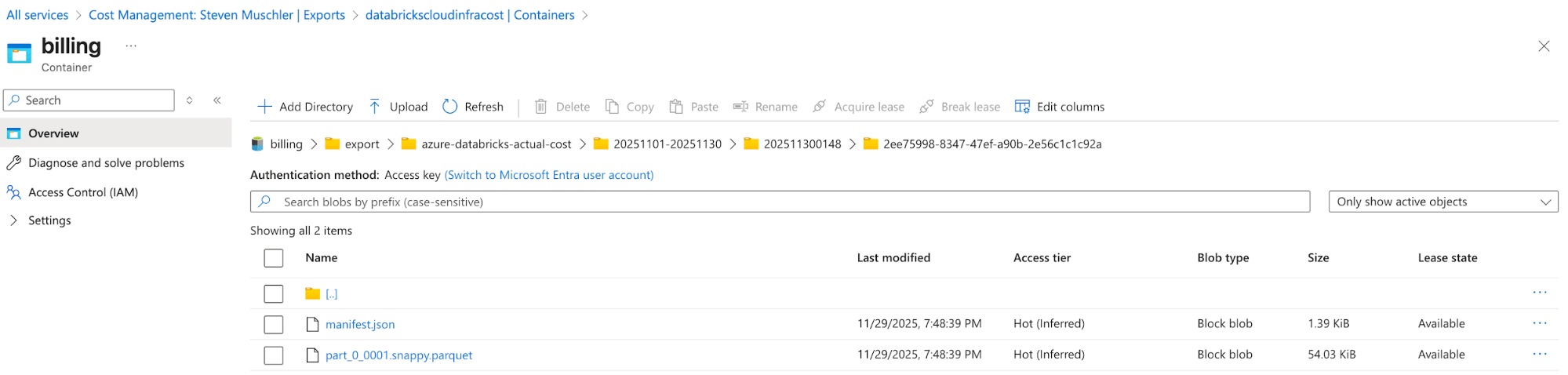

[Azure] Configure Azure Value Administration Export to export Azure Billing knowledge to the Storage Account and make sure knowledge is efficiently exporting

- The aim of this step is to make use of the Azure Value Administration’s Export performance to make the Azure Billing knowledge out there in an easy-to-consume format (e.g., Parquet).

Storage Account with Azure Value Administration Export Configured

Azure Value Administration Export mechanically delivers price information to this location - [Databricks] Databricks Asset Bundle (DAB) Configuration to deploy a Lakeflow Job, Spark Declarative Pipeline and AI/BI Dashboard

- The aim of this step is to ingest and mannequin Azure billing knowledge for visualization utilizing an AI/BI dashboard.

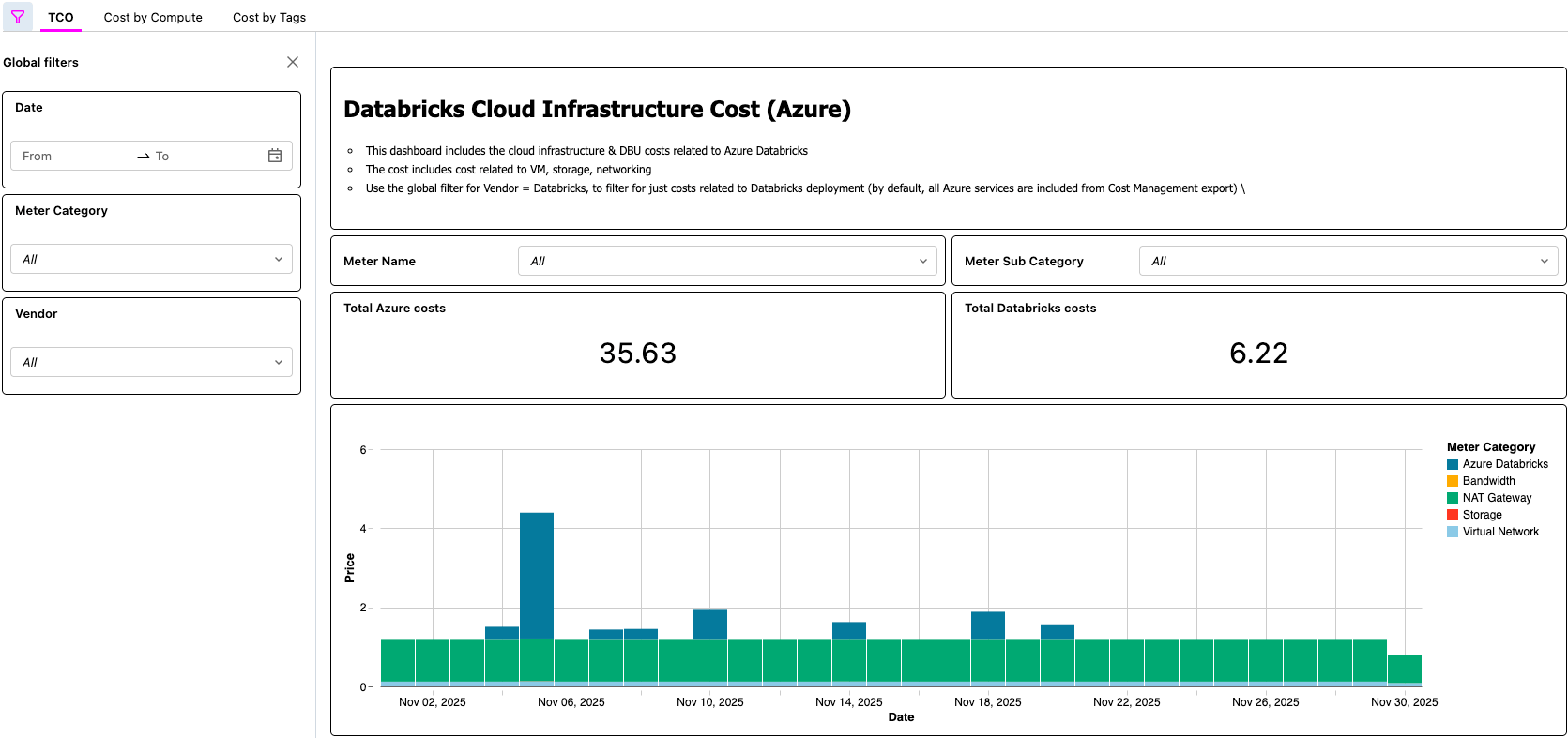

- [Databricks] Validate data in the AI/BI Dashboard and validate the Lakeflow Job

- This final step is where the value is realized. Customers now have an automated process that enables them to view the TCO of their lakehouse architecture!

AI/BI Dashboard Displaying Azure Databricks TCO

Databricks on AWS Solution

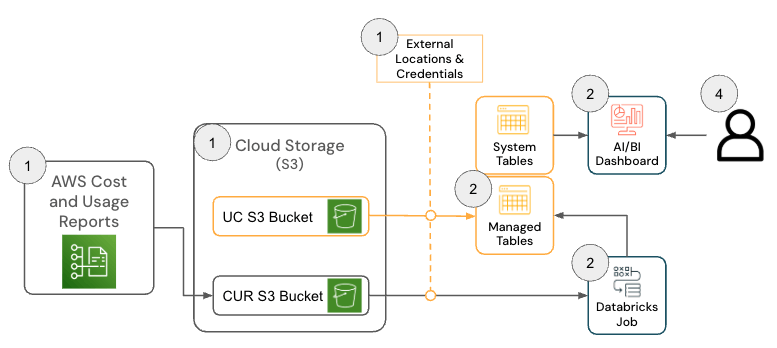

The solution for Databricks on AWS consists of several architecture components that work together to ingest AWS Cost & Usage Report (CUR) 2.0 data and persist it in Databricks using the medallion architecture.

To deploy this solution, the following permissions and configurations must be in place across AWS and Databricks:

- AWS

- Permissions to create a CUR

- Permissions to create an Amazon S3 bucket (or permissions to deploy the CUR in an existing bucket)

- Note: The solution requires AWS CUR 2.0. If you still have a CUR 1.0 export, AWS documentation provides the required steps to upgrade.

- Databricks

- Permission to create the following resources:

- Storage Credential

- External Location

- Permission to create the following resources:

The GitHub repository provides more detailed setup instructions; however, at a high level, the solution for Databricks on AWS has the following steps:

- [AWS] AWS Cost & Usage Report (CUR) 2.0 Setup

- The purpose of this step is to leverage AWS CUR functionality so that the AWS billing data is available in an easy-to-consume format.

- [Databricks] Databricks Asset Bundle (DAB) Configuration

- The purpose of this step is to ingest and model the AWS billing data so that it can be visualized using an AI/BI dashboard.

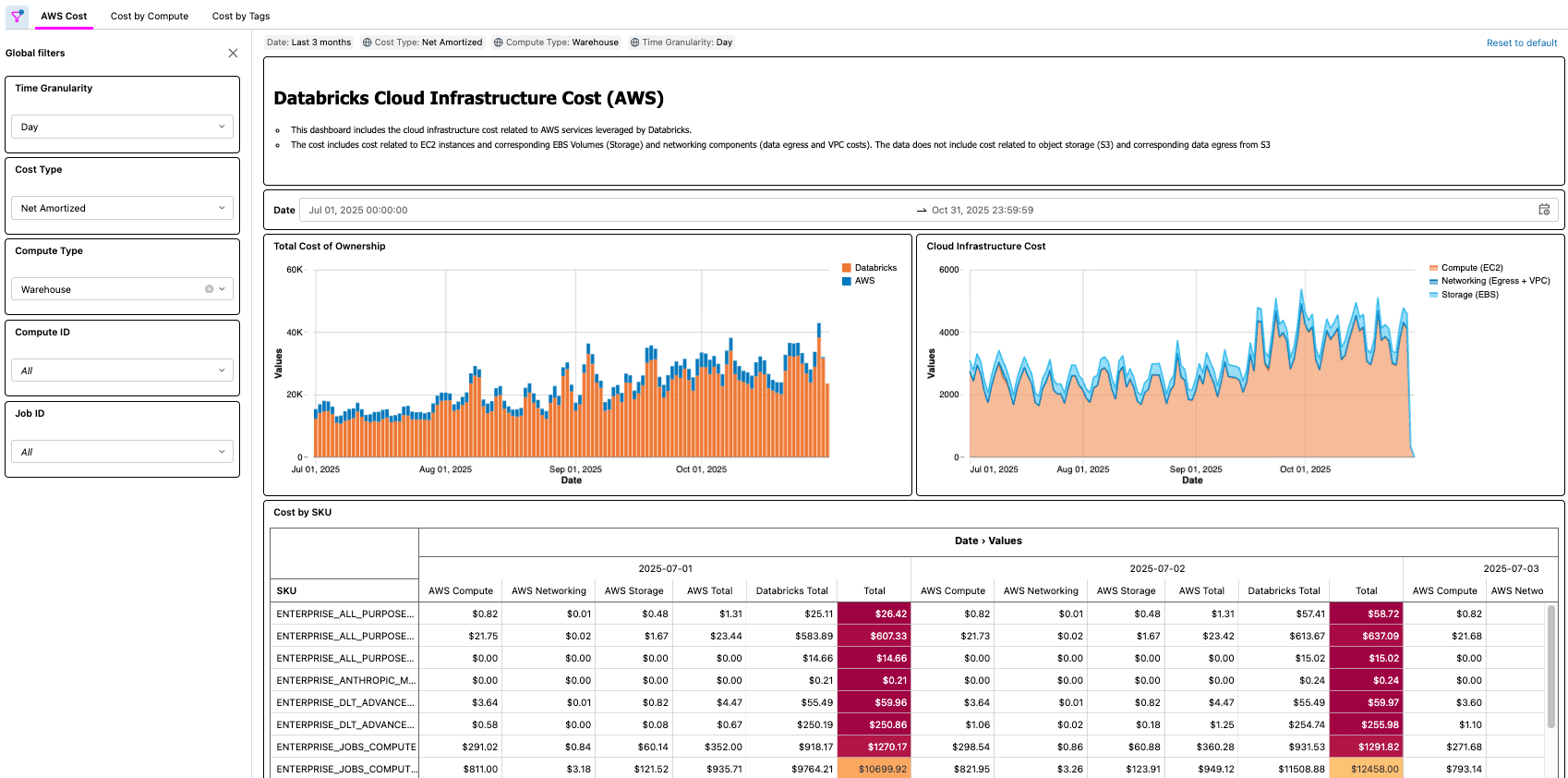

- [Databricks] Review Dashboard and validate Lakeflow Job

- This final step is where the value is realized. Customers now have an automated process that makes the TCO of their lakehouse architecture available to them!

Real-World Scenarios

As demonstrated with both the Azure and AWS solutions, there are many real-world use cases that a solution like this enables, such as:

- Identifying and calculating total cost savings after optimizing a job with low CPU and/or memory utilization

- Identifying workloads running on VM types that do not have a reservation

- Identifying workloads with abnormally high networking and/or local storage cost

As a practical example, a FinOps practitioner at a large organization with thousands of workloads might be tasked with finding low-hanging fruit for optimization by looking for workloads that cost a certain amount but also have low CPU and/or memory utilization. Since the organization's TCO information is now surfaced via the Cloud Infra Cost Field Solution, the practitioner can then join that data to the Node Timeline System Table (AWS, AZURE, GCP) to surface this information and accurately quantify the cost savings once the optimizations are complete. The questions that matter most will depend on each customer's business needs. For example, General Motors uses this type of solution to answer many of the questions above and more to ensure they are getting the maximum value from their lakehouse architecture.
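The FinOps scenario above amounts to a join between cost and utilization followed by a filter. A minimal Python sketch, assuming per-cluster total cost and a list of CPU utilization samples per cluster have already been extracted; the function name, thresholds and data are hypothetical, and the real Node Timeline table has its own schema.

```python
from statistics import mean


def find_optimization_candidates(cost_by_cluster, cpu_samples,
                                 min_cost_usd, max_avg_cpu_pct):
    """Return (cluster_id, cost, avg_cpu) tuples for costly, underused clusters."""
    candidates = []
    for cluster_id, cost in cost_by_cluster.items():
        samples = cpu_samples.get(cluster_id)
        if not samples:
            # No utilization data for this cluster, so it cannot be judged.
            continue
        avg_cpu = mean(samples)
        if cost >= min_cost_usd and avg_cpu <= max_avg_cpu_pct:
            candidates.append((cluster_id, cost, avg_cpu))
    # Most expensive candidates first.
    return sorted(candidates, key=lambda c: c[1], reverse=True)


# Hypothetical inputs: total cost per cluster and CPU utilization samples (%).
cost_by_cluster = {"c-1": 900.0, "c-2": 1200.0, "c-3": 50.0}
cpu_samples = {"c-1": [10.0, 20.0], "c-2": [80.0, 90.0], "c-3": [5.0, 5.0]}

candidates = find_optimization_candidates(
    cost_by_cluster, cpu_samples, min_cost_usd=500.0, max_avg_cpu_pct=25.0
)
```

Here only `c-1` qualifies: `c-2` is expensive but busy, and `c-3` is idle but too cheap to matter. Re-running the same query after right-sizing gives the before and after figures needed to quantify savings.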

Key Takeaways

After implementing the Cloud Infra Cost Field Solution, organizations gain a single, trusted TCO view that combines Databricks and related cloud infrastructure spend, eliminating the need for manual cost reconciliation across platforms. Examples of questions you can answer using the solution include:

- What is the breakdown of cost for my Databricks usage across the cloud provider and Databricks?

- What is the total cost of running a workload, including VM, local storage, and networking costs?

- What is the difference in total cost of a workload when it runs on serverless vs. when it runs on classic compute?

Platform and FinOps teams can drill into full costs by workspace, workload and business unit directly in Databricks, making it far easier to align usage with budgets, accountability models, and FinOps practices. Because all underlying data is available as governed tables, teams can build their own cost applications (dashboards, internal apps) or use built-in AI assistants like Databricks Genie, accelerating insight generation and turning FinOps from a periodic reporting exercise into an always-on, operational capability.

Next Steps & Resources

Deploy the Cloud Infra Cost Field Solution today from GitHub (link here, available on AWS and Azure), and get full visibility into your total Databricks spend. With full visibility in place, you can optimize your Databricks costs, including considering serverless for automated infrastructure management.

The dashboard and pipeline created as part of this solution offer a fast and effective way to begin analyzing Databricks spend alongside the rest of your infrastructure costs. However, every organization allocates and interprets charges differently, so you may choose to further tailor the models and transformations to your needs. Common extensions include joining infrastructure cost data with additional Databricks System Tables (AWS | AZURE | GCP) to improve attribution accuracy, building logic to split or reallocate shared VM costs when using instance pools, modeling VM reservations differently or incorporating historical backfills to support long-term cost trending. As with any hyperscaler cost model, there is substantial room to customize the pipelines beyond the default implementation to align with internal reporting, tagging strategies and FinOps requirements.
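One of the extensions mentioned above, splitting a shared VM cost across the workloads that used it, could be sketched as a proportional allocation. The allocation key (runtime seconds per job) and the function name are assumptions for illustration; an organization might prefer DBUs, task counts or another weight.

```python
def allocate_shared_cost(total_cost, usage_by_job):
    """Split total_cost across jobs in proportion to each job's usage weight."""
    total_usage = sum(usage_by_job.values())
    if total_usage == 0:
        # Nothing to weight by, so no cost is attributed to any job.
        return {job: 0.0 for job in usage_by_job}
    return {
        job: total_cost * usage / total_usage
        for job, usage in usage_by_job.items()
    }


# Hypothetical example: a $100 shared VM charge and runtime seconds per job.
allocation = allocate_shared_cost(100.0, {"job-a": 3600, "job-b": 1200})
```

With these inputs, `job-a` is attributed 75.0 and `job-b` 25.0. A proportional split keeps the allocated amounts summing to the original charge, which makes the result easy to reconcile against the cloud bill.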

Databricks Delivery Solutions Architects (DSAs) accelerate Data and AI initiatives across organizations. They provide architectural leadership, optimize platforms for cost and performance, enhance developer experience, and drive successful project execution. DSAs bridge the gap between initial deployment and production-grade solutions, working closely with various teams, including data engineering, technical leads, executives, and other stakeholders to ensure tailored solutions and faster time to value. To benefit from a custom execution plan, strategic guidance and support throughout your data and AI journey from a DSA, please contact your Databricks Account Team.